by Anthony Spaelti, Principal

As we outlined in a previous article, hallucinations are an inherent part of Large Language Models (LLMs) because of the probabilistic nature of those models. This is a feature and not a bug, as it aids these models in generating creative output – the original intent of most LLMs. For many, these occasional hallucinations are perfectly acceptable. However, for high-stakes applications like legal work, medical diagnosis, and financial compliance, even a small chance of error is unacceptable. We need solutions that are 100% reliable to achieve broad-scale adoption of AI in these applications.

One area of research attempting to solve this problem is Neuro-Symbolic AI. Let’s dive a little deeper into this approach and then discuss other potential contenders that might “solve” hallucinations in LLMs. One element all these solutions have in common is that they are “hybrid” approaches: a combination of two or more kinds of AI models.

Neuro-Symbolic AI is actually an “older” idea that goes back to the 1990s and means integrating symbolic systems with neural networks. A symbolic system is a formal structure that uses rules to manipulate ideas or concepts. These rules are stored in a so-called knowledge base, in a form a computer can process. In its simplest form, this knowledge base can be a plain text file.

Let’s get a little technical to really understand this. Here are two examples of symbolic rules and what they mean:

- ∀x (Mammal(x) → Breathe_air(x)) – This rule means for all examples x, if x is a mammal then x breathes air.

- Mammal(Whale) – Strictly speaking this is a fact rather than a rule; it simply means “a whale is a mammal.”

We’ve now built a very basic symbolic system with which we can do real logical reasoning. For example, I could ask, “Does a whale breathe air?” Instead of relying only on the neural net of a conventional LLM, the model can also apply these symbolic rules and answer definitively, the same way every time: “Yes, a whale breathes air.” It can derive this because it knows all mammals breathe air and it also knows a whale is a mammal; ergo, a whale must breathe air.
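To make the whale example concrete, here is a minimal sketch of how such a knowledge base could be queried. It assumes rules always have the simple form "for all x, P(x) → Q(x)"; the names `Fact`, `Rule`, `forward_chain`, and `ask` are illustrative choices, not part of any particular library, and real symbolic engines support far richer logic.

```python
from typing import NamedTuple


class Fact(NamedTuple):
    predicate: str  # e.g. "Mammal"
    subject: str    # e.g. "Whale"


class Rule(NamedTuple):
    # Encodes "for all x: premise(x) -> conclusion(x)"
    premise: str
    conclusion: str


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply every rule repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            for fact in list(derived):
                if fact.predicate == rule.premise:
                    new_fact = Fact(rule.conclusion, fact.subject)
                    if new_fact not in derived:
                        derived.add(new_fact)
                        changed = True
    return derived


def ask(query: Fact, facts: set[Fact], rules: list[Rule]) -> bool:
    """Return True only if the query follows from the knowledge base."""
    return query in forward_chain(facts, rules)


# The two statements from the text (hypothetical encoding).
RULES = [Rule("Mammal", "Breathe_air")]
FACTS = {Fact("Mammal", "Whale")}

print(ask(Fact("Breathe_air", "Whale"), FACTS, RULES))  # True
print(ask(Fact("Breathe_air", "Trout"), FACTS, RULES))  # False
```

The answer for the whale is the same on every run because it is derived, not sampled. Note that `False` here only means "not derivable from the knowledge base": nothing was ever stated about trout, so the system cannot conclude anything about them.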

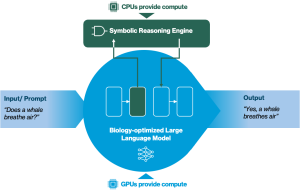

The integration between Neural-Net-based LLMs and Symbolic Systems conceptually looks a little something like this:

Figure 1: Illustration of a Neuro-Symbolic AI model

You have your standard prompt that goes into the LLM. Inside the LLM, we add a new component (the green area in Figure 1) that recognizes when actual logic is required and calls the symbolic reasoning engine. The engine performs the logical inference and sends the result back to the model. At this point, the model doesn’t modify the returned information; it only adds it to the rest of the answer it was generating.
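The flow described above can be sketched roughly as follows. This is a toy illustration under strong assumptions: the "green area" is reduced to a single regular expression that recognizes one question pattern, `neural_generate` is a placeholder rather than a real model call, and all names are hypothetical. In a real system the detection step would itself be learned, and the engine would handle arbitrary queries.

```python
import re

# Hypothetical knowledge base: facts as (predicate, subject) pairs,
# rules as a mapping "premise -> conclusion" that holds for all x.
FACTS = {("Mammal", "Whale")}
RULES = {"Mammal": "Breathe_air"}

# Stand-in for the component that recognizes when logic is required.
QUESTION = re.compile(r"does an? (\w+) breathe air\?", re.IGNORECASE)


def symbolic_engine(predicate: str, subject: str) -> bool:
    """Return True if predicate(subject) is a fact or follows from the rules."""
    known = set(FACTS)
    frontier = list(FACTS)
    while frontier:
        pred, subj = frontier.pop()
        conclusion = RULES.get(pred)
        if conclusion and (conclusion, subj) not in known:
            known.add((conclusion, subj))
            frontier.append((conclusion, subj))
    return (predicate, subject) in known


def neural_generate(prompt: str) -> str:
    """Placeholder for the LLM; a real system would call a model here."""
    return f"[free-form model text for: {prompt}]"


def answer(prompt: str) -> str:
    match = QUESTION.search(prompt)
    if match is None:
        # No logic needed: the neural network answers on its own.
        return neural_generate(prompt)
    subject = match.group(1).capitalize()
    if symbolic_engine("Breathe_air", subject):
        # The engine's result is passed through without modification.
        return f"Yes, a {subject.lower()} breathes air."
    return f"The knowledge base cannot establish that a {subject.lower()} breathes air."


print(answer("Does a whale breathe air?"))
print(answer("Write a short poem about the sea."))
```

The design point is the separation of concerns: the probabilistic component decides *whether* logic is needed, while the content of the logical answer comes only from the deterministic engine.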

That way you have a 100% reliable AI model, at least for the domains covered by the symbolic reasoning engine’s knowledge base.

So, if Neuro-Symbolic AI solves all of our problems, why isn’t everybody doing this? There are several big challenges still to be overcome before robust Neuro-Symbolic AI systems are ready for broad adoption:

- Bridging two different architectural paradigms is technically challenging. Neural networks represent knowledge as numerical weights and vectors, while symbolic AI uses explicit rules and symbols. Combining these without losing their respective strengths requires complex architectural designs. Engineers must ensure neural and symbolic components work together seamlessly, which is very difficult in practice. Researchers are exploring multiple possible solutions to this problem at an architectural level. One contender is integrating the architectures for neural net processing (so-called NPUs) and CPUs in one single chip, something our portfolio company Quadric is developing.

- Unifying the type of compute required. Neural networks require a lot of very small but parallel computing tasks, and GPUs excel at these parallel tasks, hence the incredible success NVIDIA has had over the past few years. However, logic and rules-based systems perform best with sequential computing, which is the strength of the conventional CPUs we all have in our laptops and phones. It’s hard to ensure seamless functioning between the compute needs of these different architectures without creating a lot of latency and skyrocketing costs. One possible solution to this challenge could be platforms that enable LLMs to run on regular CPUs without efficiency loss, something our portfolio company OpenInfer is working on.

- Building large logical knowledge bases has, thus far, been an expensive, time-consuming manual effort. This holds true even if we only want to build a very domain-specific Neuro-Symbolic AI system, e.g., for biology, as our simple whale example and image above illustrate. We need to find efficient ways to handle large knowledge bases and keep them current.

While Neuro-Symbolic AI is 100% reliable, these challenges also make it very expensive, slow, and difficult to develop for mass adoption and scale, at least for now. Given the current state of research on this technology, we see it as unlikely that Neuro-Symbolic AI will replace current LLM-based systems across a broad spectrum of use cases. However, it may eventually be adopted in certain verticals where accuracy is critical, such as legal work or medical diagnosis.

It’s important to mention that Neuro-Symbolic AI is not the only Hybrid AI approach that aims to create 100% reliable AI models. We see promising developments in this area from other approaches as well. Two such approaches are Logical Neural Networks (LNN) and Probabilistic Logic Networks (PLN). Like Neuro-Symbolic AI, both of these approaches combine deterministic/logical systems with probabilistic neural networks to try to address the three main challenges that Neuro-Symbolic AI currently faces. However, compared to Neuro-Symbolic AI, these approaches are more theoretical or academic concepts at this point.

Ultimately, while no single approach currently offers a silver bullet, these hybrid AI paradigms represent promising avenues for achieving reliability in high-stakes domains. Continued research and innovation will likely overcome current obstacles in the next few years, paving the way for broader adoption and trust in AI systems.